The question facing any innovative company forging a brand-new path where regulation doesn’t yet exist is: Are we doing the right thing? Companies with huge artificial intelligence development efforts, including Google/Alphabet, OpenAI, Facebook/Meta, Apple, and Microsoft, need to do some moral soul-searching. Just because you’re compliant with existing laws doesn’t mean everything you’re doing is right.

More than twenty years ago, Enron, a company named by Fortune as “America's Most Innovative Company” for six consecutive years from 1996 to 2001, was revealed to have engaged in creatively planned accounting fraud.

Enron created extremely complex financial transactions that proved too intricate for its auditors, lawyers, shareholders, and analysts to understand. The company operated in gray areas of the laws on the books at the time, creating off-balance-sheet entities and complex structures that were approved by its accountants and lawyers. Enron, with some twenty thousand employees, went bankrupt.

I see remarkable parallels between Enron leading up to its bankruptcy and what the AI companies are doing today. The technologies that AI companies are creating are so complex that regulators and lawyers can’t figure out for sure if they are breaking the law. And like Enron in the late 1990s, these companies are certainly morally wrong in many ways.

What happened at Enron?

Several years ago, I spoke at the Pendulum Summit in Dublin. Andy Fastow, the chief financial officer at Enron during its 2001 collapse, was also speaking at the conference and we had ample time for lengthy one-on-one conversations over several meals.

Andy, who had been named CFO of the Year by CFO magazine, was charged with 78 counts, including fraud, and served a six-year prison sentence.

I was fascinated speaking with Andy and probing his experiences at Enron. He told me that much of what Enron was doing was technically legal but morally suspect. He said that when people celebrate your success, when your company is named the most innovative and you’re written about as being at the top of your profession, it tends to go to your head. You begin to think you can do no wrong.

“I got both an award for CFO of the year and a prison card for doing the same deals,” Andy says. “How’s that possible? How is it possible to be CFO of the year and to go to federal prison for the same thing?”

I didn’t take notes when Andy and I spoke one-on-one, so I’ve pulled some quotes from interviews with Andy in strategiccfo360 and Fraud Magazine, as well as from a recap of Andy’s talk at the Pendulum Summit.

“I always tried to technically follow the rules, but I also undermined the principle of the rule by finding the loophole,” Andy says. “I think we were all overly aggressive. If we ever had a deal structure where the accountant said, ‘The accounting doesn't work,’ then we wouldn't do those deals. We simply kept changing the structure until we came up with one that technically worked within the rules.”

Artificial Intelligence and the new frontier

Over the past several years, I’ve been fascinated by AI, writing frequently about both the good and bad aspects of this new technology.

In my article titled Presidential Election 2024: How Artificial Intelligence is Rewriting the Marketing Rules, I wrote about how AI amplification of fake content on social media, including YouTube and Facebook, is likely to pose a major risk to democracy during the upcoming U.S. election cycle.

The sad truth is that the Facebook AI News Feed rewards anger, conspiracy, and lies because they tend to keep people on the service longer. YouTube's AI engine is similar. The Facebook AI algorithm leads tens of millions of its nearly three billion active global users into an abyss of misinformation, a quagmire of lies, and a quicksand of conspiracy theories.

As fake content is generated and amplified via the social networks’ AI, millions of voters may not know the truth about what they are seeing. This has real power to sway elections, especially if something dramatic but fake is released in the days or hours before the election on Tuesday, November 5, 2024.

The thousands of brilliant people at Facebook and YouTube clearly understand their AI is powering conspiracy theories, polarization, and hate.

However, Meta and Alphabet seem to be operating within current laws, just as Enron was. It’s not clear they’re paying enough attention to the moral issues of what their AI has created.

“There’s a tendency when a company is successful that the people who should be natural skeptics become obsequious,” Andy says. “Instead of challenging what you’re doing, they want to be part of the success. They don’t do their jobs.”

What's coming next?

I see many parallels between Enron and AI companies.

Ridiculously complex AI algorithms are like Enron’s complex financial transactions. The accountants and lawyers who worked with Enron didn’t understand the math. Now, even the very people who build AI companies don’t fully understand how their technology works.

Thousands of articles have been written about the brilliance of ChatGPT from OpenAI. Reuters cited a UBS study estimating that ChatGPT reached 100 million monthly active users just two months after launch, making it the fastest-growing consumer application in history.

The brilliant minds at companies like OpenAI are focused on getting the technology out there, even if it has negative aspects, possibly including the risk of AI turning against humans in the future. The tech is just too new to have legal roadblocks (yet).

In an article in the upcoming September 2023 issue of The Atlantic titled Does Sam Altman Know What He’s Creating?, the CEO of OpenAI, the company behind ChatGPT, says that his employees “often lose sleep worrying about the AIs they might one day release without fully appreciating their dangers.”

So at least they are aware of the dangers. However, just as with Enron two decades ago, there is no serious regulation currently in place to stop them from doing what they want.

Altman says there’s a chance that so-called artificial general intelligence (which is still years or decades away) could turn against humans. “I think that whether the chance of existential calamity is 0.5 percent or 50 percent, we should still take it seriously,” Altman says. “I don’t have an exact number, but I’m closer to the 0.5 than the 50.”

AI companies playing fast and loose with the law

As I’ve played around with chatbots like ChatGPT, I’ve found content from my books and other writing within the results. Clearly, the models have been trained on copyrighted data and, in the case of books, on illegal copies.

Scraping copyrighted content and using it is, like Enron’s financial transactions, a legal gray area. It’s morally wrong to steal data, but is it against the law?

We may know soon because Sarah Silverman is suing OpenAI and Meta for copyright infringement.

And it looks like the regulators are sniffing around. The Federal Trade Commission has opened an expansive investigation into OpenAI, probing whether the ChatGPT bot has run afoul of consumer protection laws by putting personal reputations and data at risk.

Enron’s Andy Fastow says: “We devolved into doing deals where the intent — the whole purpose of doing the deals — was to be misleading. Again, the deal technically may have been correct, but it really wasn't because the intent was wrong. All of the deals were technically approved by our attorneys and accountants. I wouldn't expect the typical fraud examiner to have enough of an auditing or accounting background to be making determinations whether the accounting is correct.”

Other morally suspect issues with AI chatbots include teaching people how to make meth or a bomb, or how to write more realistic-sounding s*%& email.

And AI will take away people’s jobs. “A lot of people working on AI pretend that it’s only going to be good; it’s only going to be a supplement; no one is ever going to be replaced,” OpenAI’s Altman says. “Jobs are definitely going to go away, full stop.”

Ethics and AI companies

Given that Alphabet/Google/YouTube and Meta/Facebook have had years to tune their AI algorithms to avoid rewarding conspiracy and hate and haven’t done so, I don’t have much hope that they will suddenly do the morally right thing.

Consider another morally ambiguous position of the big companies playing in the AI world: corporate taxes. Companies like Apple were founded in the USA, and most of their employees work in this country. Apple proudly says its hardware is “Designed in California.” Yet its corporate structure, which is legal under current laws, allows it to base its international headquarters overseas to avoid paying taxes in the United States.

“Ireland is the international headquarters for one of the most valuable companies in the world, Apple computer,” Andy said in Dublin at the Pendulum Summit. “Why is that? Why? Taxes! It is the Irish tax structure. It’s great and it’s a big part of your allure, helping companies avoid paying taxes. The problem is one day people may wake up and think differently and there may be a populist revolt around the world. They may say who is helping those rich billionaires avoid paying taxes and they may not feel the same way about Ireland in the future.”

When asked for his advice on ethical issues, Andy says that company leaders “should think of generic questions like, ‘If I own this company and I were leaving it to my grandchildren would I make this decision?’ A simple question like that would have caught 99 percent of the fraud that went on at Enron, because the answer would have been ‘No’. This forces you to go through the thought process of legitimizing why you're doing it.”

Is government regulation of AI coming?

Lindsey Graham, the senior Republican senator from South Carolina, and Elizabeth Warren, the senior Democratic senator from Massachusetts, are proposing a new Digital Consumer Protection Commission Act.

In a New York Times opinion piece, Graham and Warren say the Act “would create an independent, bipartisan regulator charged with licensing and policing the nation’s biggest tech companies — like Meta, Google and Amazon — to prevent online harm, promote free speech and competition, guard Americans’ privacy and protect national security. The new watchdog would focus on the unique threats posed by tech giants while strengthening the tools available to the federal agencies and state attorneys general who have authority to regulate Big Tech.”

It appears that the Act is squarely aimed at AI. As Graham and Warren write, “Americans deserve to know how their data is collected and used and to control who can see it. They deserve the freedom to opt out of targeted advertising. And they deserve the right to go online without, say, some A.I. tool’s algorithm denying them a loan based on their race or politics. If our legislation is enacted, platforms would face consequences for suppressing speech in violation of their own terms of service. The commission would have the flexibility and agility to develop more expertise and respond to new risks, like those posed by generative A.I.”

I don’t know enough about this potential legislation to figure out if I support it.

Enron’s collapse led to major legislation including the Sarbanes–Oxley Act of 2002, a federal law that mandates certain practices in financial record keeping and reporting for corporations.

Let’s hope that AI companies figure out how to do the right thing before AI regulation must be created because of a similarly spectacular collapse of an AI company.

I'll be delivering a talk at HubSpot's INBOUND conference next month titled How to Get Found in LLM AI Search Like ChatGPT-Powered Bing. In my talk, perhaps I'll go off on an Enron tangent...

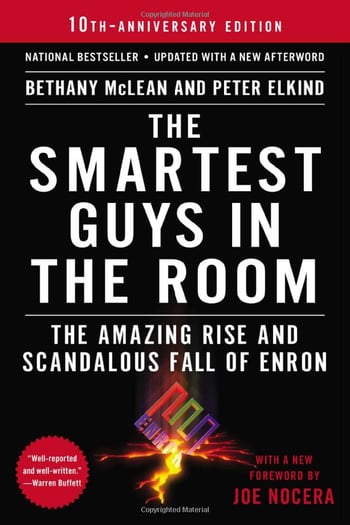

For much more on what happened at Enron, I highly recommend the book The Smartest Guys in the Room: The Amazing Rise and Scandalous Fall of Enron.